Tea Harvesting Robot


Overview

A human-robot cooperative vehicle has been developed for tea plucking to address the labor shortage in the tea industry. Rather than a fully autonomous solution, a cooperative framework was proposed that combines human expertise in tea harvesting with robotic power assistance for handling the tea cutting apparatus. The human and the robot collaborate by walking side by side while carrying the tea cutting apparatus along the tea rows to harvest the leaves. For the experiments, the semiautonomous vehicle was built to be lightweight and compact, and it runs on tracks. The experimental results showed that the human could easily guide the tea plucking machine during harvesting because the robot bears most of the machine's weight. The proposed cooperative robot could be particularly effective in improving farmers' working conditions during small-scale tea harvesting on tea plantations.

To turn in the limited space between rows of tea trees while satisfying nonholonomic constraints and the constraints imposed by soil mechanics, a path planning algorithm based on the properties of Reeds-Shepp curves has been proposed. In addition, to improve trajectory tracking performance, an adaptive law for slippage estimation was implemented to compensate for track slippage and increase tracking accuracy.

Skills & Abilities

- ROS and Linux
- Programming (Python & C)
- MATLAB & Simulink path planning simulation
- Computer vision
- Lidar (obstacle avoidance & SLAM)
- Circuitry and electrical wiring
- Mechanism design and fabrication


Functional Requirements

<Following mode>

1. Autonomously follow the human worker

2. Static object avoidance

<Auto-Turning mode>

1. Generate a collision-free path using Reeds-Shepp curves

2. Trajectory tracking with slippage compensation

Hardware Setup

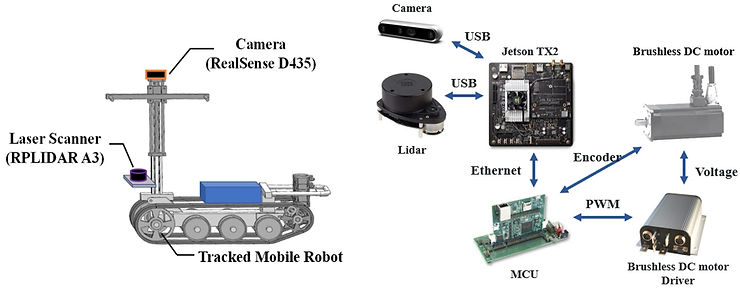

Our system is based on a lightweight tracked vehicle equipped with an RGB-D camera and a two-dimensional laser scanner. The camera (RealSense D435, Intel Inc., USA) has a range of up to 10 m and provides accurate depth information even when the target is moving; in this research it was used to detect the marker attached to the human worker. The marker consists of several AR tags and is tracked by the ar_track_alvar software package, a Robot Operating System (ROS) wrapper for Alvar. Alvar is an AR tag tracking library that copes with varying lighting conditions through adaptive thresholding and provides stable pose estimation. The laser scanner (RPLIDAR A3, Slamtec Co., Ltd., China) has a maximum range of 25 m and is suitable for both indoor and outdoor environments. We used it to detect the rows of tea trees so that the vehicle stays within the path between the rows of the plantation. The same scanner is also used to avoid static obstacles.
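Before tree rows can be detected, each scan has to be converted from polar readings to Cartesian points. The sketch below mirrors the fields of a ROS sensor_msgs/LaserScan message (angle_min, angle_increment, range_min, range_max, ranges) but takes them as plain arguments so it runs without ROS. The function name and the default range limits (a hypothetical 0.15 m minimum and the scanner's stated 25 m maximum) are assumptions, not the project's code.

```python
import math

def scan_to_points(ranges, angle_min, angle_increment,
                   range_min=0.15, range_max=25.0):
    """Convert a planar laser scan into (x, y) points in the scanner frame.

    Argument names mirror sensor_msgs/LaserScan fields. Readings outside
    [range_min, range_max] are dropped; the chained comparison is False
    for NaN and inf, so those readings are dropped as well.
    """
    points = []
    for i, r in enumerate(ranges):
        if not (range_min <= r <= range_max):
            continue  # invalid or out-of-range reading
        angle = angle_min + i * angle_increment  # beam angle of reading i
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points
```

Filtering invalid readings here keeps inf and NaN returns (common when a beam hits nothing between the rows) out of the later line fit.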

Materials and Methods

Following Mode

[Row-following schematic diagram]

[Algorithm framework]

Tree Rows Detection

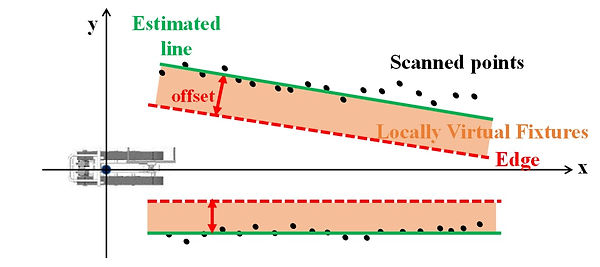

After the Lidar detects the branches and stems, the points closest to the robot are extracted. RANSAC-based line fitting is then used to estimate a line along the tree row that the robot must not cross. Offsetting this line by a safety margin defines the virtual fixtures, which keep the robot out of regions that are full of obstacles. As long as the robot stays outside the virtual fixtures, it follows the movement of the human worker; if it enters a virtual fixture, it adjusts its heading and tries to return to the safe region.
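The row-line fit and the offset test can be sketched with a plain RANSAC loop. The sketch assumes the points are already in the robot frame with the robot at the origin, represents the line as a*x + b*y + c = 0 with a unit normal (so a*x + b*y + c is a signed distance), and treats a point as inside the virtual fixture when it lies closer to the row line than the offset, or beyond it. The threshold, iteration count, offset, and function names are hypothetical; the project's own fitting and fixture parameters are not given here.

```python
import math
import random

def fit_line_ransac(points, threshold=0.05, iterations=200, seed=0):
    """RANSAC line fit. Returns ((a, b, c), inliers) with a*x + b*y + c = 0
    and a^2 + b^2 = 1, so a*x + b*y + c is the signed distance to the line."""
    rng = random.Random(seed)
    best_line, best_inliers = None, []
    for _ in range(iterations):
        (x1, y1), (x2, y2) = rng.sample(points, 2)  # minimal sample: 2 points
        a, b = y2 - y1, x1 - x2                      # normal to the segment
        norm = math.hypot(a, b)
        if norm < 1e-9:
            continue                                 # degenerate sample
        a, b = a / norm, b / norm
        c = -(a * x1 + b * y1)
        inliers = [(x, y) for x, y in points if abs(a * x + b * y + c) < threshold]
        if len(inliers) > len(best_inliers):         # keep the best consensus
            best_line, best_inliers = (a, b, c), inliers
    return best_line, best_inliers

def orient_toward_robot(line, robot=(0.0, 0.0)):
    """Flip the line's sign so the robot's side has a positive signed distance."""
    a, b, c = line
    if a * robot[0] + b * robot[1] + c < 0:
        a, b, c = -a, -b, -c
    return a, b, c

def in_virtual_fixture(point, line, offset):
    """True if `point` is closer than `offset` to the row line, or past it."""
    a, b, c = line
    return a * point[0] + b * point[1] + c < offset

# Example: a straight row 1 m to the robot's left plus three stray returns.
row_points = [(0.1 * i, 1.0) for i in range(31)] + [(0.5, 2.0), (1.5, 0.3), (2.5, 1.6)]
line, inliers = fit_line_ransac(row_points)
line = orient_toward_robot(line)
```

With a hypothetical 0.3 m offset, a robot position at (0, 0.8) is flagged as inside the fixture, while the origin is not. RANSAC is used because stray returns from weeds or single branches would pull an ordinary least-squares line away from the row.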

Autonomy Assessment

The robot assesses and adjusts its level of autonomy based on two relationships: its position relative to the virtual fixtures, and the human worker's pose relative to the workspace.

The autonomy assessment function A(t), which takes values from 0 to 1, determines which policy the robot follows.

When A(t) = 1, the robot is fully autonomous. In this state, if the robot is about to move into a virtual fixture, it autonomously moves toward the safe region.

When A(t) = 0, the suspension policy is activated and the robot stops moving.

When A(t) is strictly between 0 and 1, the collaboration policy is active, and the robot keeps pace side by side with the human.
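The mapping from A(t) to the three policies can be sketched as a small dispatch function. How A(t) itself is computed is not described here, so the sketch simply takes it as an input. The names `Policy` and `select_policy` and the tolerance `eps` (used to treat values numerically close to 0 or 1 as the end points) are hypothetical.

```python
from enum import Enum

class Policy(Enum):
    SUSPENSION = "suspension"        # A(t) = 0: the robot stops
    COLLABORATION = "collaboration"  # 0 < A(t) < 1: walk side by side with the worker
    AUTONOMOUS = "autonomous"        # A(t) = 1: steer back to the safe region on its own

def select_policy(a_t, eps=1e-6):
    """Pick the control policy from the autonomy assessment value A(t)."""
    if not 0.0 <= a_t <= 1.0:
        raise ValueError("A(t) must lie in [0, 1]")
    if a_t <= eps:
        return Policy.SUSPENSION
    if a_t >= 1.0 - eps:
        return Policy.AUTONOMOUS
    return Policy.COLLABORATION
```

Rejecting values outside [0, 1] makes a faulty assessment visible instead of silently mapping it to one of the policies.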

Field Test Results

To verify the feasibility of the cooperative control scheme, tests were conducted at a tea plantation of the Tea Research and Extension Station, Executive Yuan, Taiwan.

The human and the robot started at rest, side by side, and then moved forward together for 3 m. The detected tree rows were nearly consistent with the occupancy grid map built offline and were not affected by minor branches and weeds on the path.

The workload carried by the human workers was also analyzed. The figure on the left shows the load on individual workers without the robot, and the figures on the right show the difference when they collaborate with the robot. The comparison indicates that using the robot greatly reduces the load on human workers.

Auto-Turning Mode

This part focuses on the path planning and trajectory tracking that allow the tracked mobile robot (TMR) to turn between rows of tea trees. Because the space between the rows leaves very little room for the vehicle to turn, a suitable configuration path, covering both the robot's position and its heading, must be planned.

Assumption 1: The minimum distance between obstacles is greater than the width of the tracked mobile robot, 2b.

Under Assumption 1, it has been proved that there is always at least one solution that brings the robot to the goal position. First, the goal position is defined as q_goal = [x_g, y_g, phi_g]^T. It is then replaced by a modified goal position q_mod = [x_g/R, y_g/R, phi_g]^T, where R is the minimum turning radius. This normalization makes the Reeds-Shepp curves algorithm much easier to implement, because only the segment lengths [t', s', v'] of each curve need to be calculated for a unit turning radius. Adopting one of the solutions determines the path that takes the vehicle to q_mod. Scaling back to q_goal, the segment lengths become [t, s, v] = R[t', s', v']. Configuration path planning can then be carried out.
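The normalize, solve, and rescale procedure can be sketched for a single Reeds-Shepp word, L+S+L+ (left arc, straight segment, left arc, all driven forward), whose unit-radius solution has a closed form. A complete planner evaluates every Reeds-Shepp word, including reverse-gear segments, and keeps the shortest feasible one; only this one word is shown, and the function names are hypothetical. The arc parameters t' and v' are turning angles in radians, which equal arc lengths on the unit circle, so multiplying by R gives the real lengths.

```python
import math

def polar(x, y):
    """Return (radius, angle) of the vector (x, y)."""
    return math.hypot(x, y), math.atan2(y, x)

def lsl_unit(x, y, phi):
    """Segment parameters [t', s', v'] of the L+S+L+ word for a unit turning
    radius, or None if this word cannot reach the configuration (x, y, phi)."""
    s, t = polar(x - math.sin(phi), y - 1.0 + math.cos(phi))
    if 0.0 <= t <= math.pi:
        v = (phi - t) % (2.0 * math.pi)  # remaining turn of the second arc
        if 0.0 <= v <= math.pi:
            return [t, s, v]
    return None

def lsl_scaled(q_goal, R):
    """Normalize the goal by R, solve with unit radius, then scale by R."""
    x_g, y_g, phi_g = q_goal
    unit = lsl_unit(x_g / R, y_g / R, phi_g)  # q_mod = [x_g/R, y_g/R, phi_g]
    if unit is None:
        return None
    return [R * length for length in unit]    # [t, s, v] = R [t', s', v']
```

For example, with R = 2 m and q_goal = [0, 6, pi]^T (6 m to the left, facing backward), the result is approximately [pi, 2, pi]: a quarter-circle arc of length pi m, 2 m straight, and another quarter-circle arc.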

[Unit circle turning radius]

[minimum turning radius]

[Flow Chart]

To track the desired trajectory, we used a state feedback controller combined with a feedforward command.
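A controller with this structure can be sketched as follows: the reference velocities (v_ref, w_ref) of the planned trajectory are applied directly as the feedforward command, and the along-track, cross-track, and heading errors, expressed in the robot frame, are fed back through constant gains. This is a generic example of linear feedback plus feedforward, not the project's controller; the gains, the error definition, and the function names are assumptions. The last helper splits the body command into left and right track speeds using b, half the vehicle width.

```python
import math

def wrap_angle(a):
    """Wrap an angle to (-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))

def tracking_control(pose, ref_pose, v_ref, w_ref, k_x=1.0, k_y=2.0, k_th=2.0):
    """Feedforward (v_ref, w_ref) plus linear feedback on the tracking error.
    pose and ref_pose are (x, y, theta); the gains are hypothetical."""
    x, y, th = pose
    x_r, y_r, th_r = ref_pose
    dx, dy = x_r - x, y_r - y
    e_x = math.cos(th) * dx + math.sin(th) * dy   # along-track error
    e_y = -math.sin(th) * dx + math.cos(th) * dy  # cross-track error (left positive)
    e_th = wrap_angle(th_r - th)                  # heading error
    v = v_ref + k_x * e_x
    w = w_ref + k_y * e_y + k_th * e_th
    return v, w

def track_speeds(v, w, b):
    """Split (v, w) into left and right track speeds; b is half the width."""
    return v - b * w, v + b * w
```

When the robot is exactly on the reference, the feedback terms vanish and the command equals the feedforward velocities, so the feedback only has to correct deviations.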

[Trajectory tracking controller block diagram]

Simulation Model

In this section, a Simulink model was built to verify the feasibility of the proposed method. Because of the geographical constraints of the tea fields, a suitable path for the robot must first be designed and simulated. The simulation predicts the robot's position along a planned path, which lets us design and check paths without placing the mobile robot on the ground for every trial.

To model the behavior of the vehicle, some parameters had to be assumed in the simulation. The speed difference between the two tracks was set to 60%. Using the kinematic model and the longitudinal slip coefficients derived in the previous part, the rotational speeds of the tracks are converted into the vehicle's velocity in the global frame.
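The conversion from track rotation speeds to vehicle motion can be sketched with standard differential-drive kinematics, with each track's speed scaled by a slip coefficient and the pose integrated with a simple Euler step. The slip convention used here (effective track speed = i * r * omega, so values near 1 mean little slip, consistent with i_L = 0.964 and i_R = 0.972) is an assumption, as are the function names and the time step; the project's own kinematic model and slip definition may differ.

```python
import math

def body_velocity(w_left, w_right, r, b, i_left, i_right):
    """Forward speed v and yaw rate w from track angular speeds.
    Assumes effective track speed = i * r * omega (i = fraction of the
    theoretical track speed that reaches the ground); b is half the width."""
    v_left = i_left * r * w_left
    v_right = i_right * r * w_right
    return (v_left + v_right) / 2.0, (v_right - v_left) / (2.0 * b)

def simulate(w_left, w_right, r, b, i_left, i_right, duration, dt=0.01):
    """Euler-integrate the pose (x, y, theta) in the global frame."""
    x = y = theta = 0.0
    v, w = body_velocity(w_left, w_right, r, b, i_left, i_right)
    for _ in range(round(duration / dt)):
        x += v * math.cos(theta) * dt
        y += v * math.sin(theta) * dt
        theta += w * dt
    return x, y, theta
```

With b = 0.15 m and r = 0.075 m, equal track speeds and unequal slip (0.964 left, 0.972 right) make the simulated vehicle drift slowly to the left, which is the kind of error the slippage compensation is meant to remove.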

The physical parameters of the TMR are set as follows: b = 0.15 m (half the vehicle width 2b), r = 0.075 m, rho_1 = 20, rho_2 = 20, eta = 1, and k_b = 14. The initial conditions are a_L(0) = a_R(0) = 1. Taking the average slippage from a series of experiments, we set the slip parameters in the simulation to i_L = 0.964 and i_R = 0.972.
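The project's adaptive law and the roles of its gains (rho_1, rho_2, eta, k_b) are not spelled out here, so the following is only a generic illustration of online slip estimation, not the project's method: a gradient update that adjusts a slip-ratio estimate to reduce the error between a measured track speed and the predicted one, starting from 1 (no slip). The slip convention (effective speed = a * r * omega), the adaptation gain `gamma`, the time step, and the function name are all assumptions.

```python
def estimate_slip_ratio(samples, r, gamma=50.0, dt=0.01, a0=1.0):
    """Gradient-type online estimate of the slip ratio a, where the effective
    track speed is assumed to be a * r * omega.

    samples: iterable of (omega, measured_speed) pairs, one per time step.
    """
    a_hat = a0
    for omega, v_meas in samples:
        regressor = r * omega                    # theoretical track speed
        error = v_meas - a_hat * regressor       # speed prediction error
        a_hat += gamma * error * regressor * dt  # gradient step on error^2 / 2
    return a_hat
```

With noise-free measurements from a constant track speed, the estimate converges exponentially from 1 toward the true ratio; the convergence rate grows with gamma and with the square of the track speed, so very slow motion gives little information about slip.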

Field Test Results

[Simulated path vs actual path]

[Actual path overlap with the map constructed by Lidar]

Using the data collected by the Lidar, the map was built with the ROS package hector_mapping, and the robot's recorded positions are overlaid on the map. The white areas were judged reachable by the vehicle, while the gray areas are locations the Lidar beams could not reach. The black dots are obstacles detected by the Lidar, which are in fact the stems of the tea trees. The figure shows that the vehicle successfully made the turn between the two corridors without bumping into any obstacles.
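The visual check in the figure can also be done numerically. hector_mapping publishes a nav_msgs/OccupancyGrid, whose row-major data array stores -1 for unknown cells and occupancy probabilities from 0 to 100 otherwise. The sketch below classifies cells into the white (free), gray (unknown), and black (occupied) categories and reports any recorded robot position that lands in an occupied cell. It takes the grid fields as plain arguments instead of a ROS message; the occupancy threshold of 65, the zero-yaw map origin, and the function names are assumptions.

```python
import math

UNKNOWN, FREE, OCCUPIED = "unknown", "free", "occupied"

def classify_cell(value, occupied_threshold=65):
    """-1 = unknown (gray); otherwise an occupancy probability in percent,
    split into free (white) and occupied (black) by a hypothetical threshold."""
    if value < 0:
        return UNKNOWN
    return OCCUPIED if value >= occupied_threshold else FREE

def world_to_cell(x, y, origin_x, origin_y, resolution):
    """Map a world point to (row, col), assuming the grid origin has zero yaw."""
    col = math.floor((x - origin_x) / resolution)
    row = math.floor((y - origin_y) / resolution)
    return row, col

def path_collisions(path, data, width, height, origin_x, origin_y, resolution):
    """Return the path points that fall in occupied cells of a row-major grid."""
    hits = []
    for x, y in path:
        row, col = world_to_cell(x, y, origin_x, origin_y, resolution)
        if 0 <= row < height and 0 <= col < width:  # ignore points off the map
            if classify_cell(data[row * width + col]) == OCCUPIED:
                hits.append((x, y))
    return hits
```

An empty result means no recorded position fell on a cell marked as an obstacle. This checks only the logged reference point, not the full footprint of the vehicle, which would need the grid to be inflated by the vehicle's half-width first.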

Conclusion and Future Work

In this research, a human-robot cooperative vehicle has been proposed to help harvest tea and address the labor shortage in the tea industry. The robot must turn autonomously between rows of tea trees when it reaches the end of a corridor. Because of the nonholonomic constraints and the dynamic constraints from soil mechanics, the actual minimum turning radius of the robot is difficult to obtain. To turn between rows of tea trees, path planning and trajectory tracking algorithms were implemented on the tracked mobile robot. The controller that tracks the desired trajectory is based on a linear feedback law combined with a feedforward command. The experimental results obtained in a real tea plantation demonstrated the feasibility of the proposed system.

For future work, an algorithm that determines where to place waypoints along the path is necessary for the system to become truly autonomous. In addition, all of the experiments were conducted in tea fields located on flat land, while many tea fields in Taiwan are located on hills. Testing the robot on hillside plantations would greatly improve its adaptability and make it more helpful in Taiwan.

Supplementary Materials

Taiwan International Agricultural Machinery and Materials Exhibition

The picture on the right shows me at our stall at the Taiwan International Agricultural Machinery and Materials Exhibition. While I was demonstrating the robot during the exhibition, dozens of local farmers expressed interest in purchasing it, highlighting the urgent need for robots to make up for the shortage of laborers. This experience inspired me to pursue a graduate degree and a career in robotics, and to use my knowledge to help solve this serious problem.